In the last post I concluded with a brief discussion of Contribution Margin as a key KPI. Recall:

Contribution Margin = Variable Profits – Sales and Marketing Cost

The higher the contribution margin, the more dollars available towards covering G&A. Once contribution margin exceeds G&A, a company reaches operating profits. For simplicity in this post, I’ll use gross margin (GM) as the definition of variable profits even though there may be other costs that vary directly with revenue.

The Drivers of Contribution Margin (CM)

There is a direct correlation between GM percent and CM: very high gross margin companies will, in general, get to strong contribution margins, and low gross margin companies will struggle to get there. But the sales and marketing spend needed to drive growth is just as important. Several underlying factors determine how much needs to be spent on sales and marketing to drive growth:

- The profits on a new customer relative to the cost of acquiring her (or him). That is, the CAC (customer acquisition cost) for customers derived from paid advertising compared to the profits on those customers’ first purchase

- The portion of new traffic that is “free” from SEO (search engine optimization), PR, existing customers recommending your products, etc.

- The portion of revenue that comes from repeat customers

The Relationship Between CAC and First Purchase Profits Has a Dramatic Impact on CM

Suppose Company A spends $60 to acquire a customer and has GM of $90 on that customer’s initial purchase. Contribution margin on that customer is already positive $30 before accounting for any organic or repeat customers, which is an extremely favorable position. Of course, the eCommerce startups I see are rarely in this situation, but those that are can reach profitability fairly quickly if this relationship holds as they scale.

It would be more typical for companies to find that the initial purchase GM covers only a portion of CAC, but that subsequent purchases create a positive relationship between the LTV (lifetime value) of the customer and CAC. If I assume the spend to acquire a customer is $60 and the GM is $30, then the CM on the first purchase would be negative (-$30), and it would take a second purchase with the same GM dollars to cover that initial cost. Most startups require several purchases to recover CAC, which in turn means they need investment dollars to cover the outlay.
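The per-customer arithmetic in the two examples above can be sketched in a few lines. This is a minimal illustration, assuming every order from a customer carries the same GM dollars; the function names are hypothetical, not part of any tool the post describes.

```python
import math


def first_purchase_cm(cac: float, gm_per_order: float) -> float:
    """Contribution margin on a paid customer's first order: GM minus CAC."""
    return gm_per_order - cac


def orders_to_recover_cac(cac: float, gm_per_order: float) -> int:
    """Orders needed before cumulative GM covers CAC.

    Assumes (simplification) that every order yields the same GM dollars.
    """
    if gm_per_order <= 0:
        raise ValueError("GM per order must be positive")
    return math.ceil(cac / gm_per_order)


# Company A: $60 CAC, $90 GM on the first order
print(first_purchase_cm(60, 90), orders_to_recover_cac(60, 90))
# More typical case: $60 CAC, $30 GM per order
print(first_purchase_cm(60, 30), orders_to_recover_cac(60, 30))
```

Company A recovers CAC on the first order with $30 to spare, while the $30-GM company needs a second order just to break even on acquisition.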

Free Traffic and Contribution Margin

If a company can generate a high proportion of free/organic traffic, contribution margin benefits. CAC is defined as the marketing spend divided by the number of new customers derived from that spend. Blended CAC is defined as the marketing spend divided by all customers who purchased in the period. The more customers who arrive organically or return on their own, the lower the “blended CAC”. Using the above example, suppose 50% of the new customers for Company A come from organic (free) traffic. Then the “blended CAC” would be 50% of the paid CAC: $30 instead of $60. If the GM on the initial purchase were only $30, that purchase would cover blended CAC.
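The two CAC definitions above differ only in the denominator. A minimal sketch, using a hypothetical month for Company A with 1,000 paid and 1,000 organic new customers and no repeat buyers (the customer counts are illustrative assumptions, not figures from the post):

```python
def paid_cac(marketing_spend: float, new_paid_customers: int) -> float:
    """Marketing spend divided by the new customers that spend produced."""
    return marketing_spend / new_paid_customers


def blended_cac(marketing_spend: float, purchasing_customers: int) -> float:
    """Marketing spend divided by every customer who purchased in the period."""
    return marketing_spend / purchasing_customers


# Hypothetical month: 1,000 paid customers at $60 each, plus 1,000 organic ones
spend = 1_000 * 60
print(paid_cac(spend, 1_000), blended_cac(spend, 1_000 + 1_000))
```

With half of new customers arriving for free, blended CAC is half of paid CAC; repeat buyers in the period would lower it further.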

Of course, beyond obtaining customers for free through organic traffic, companies have an increasing opportunity, as they build their customer base, to obtain free traffic by getting existing customers to buy again. So a company should never forget that maintaining a persistent relationship with customers leads to improved Contribution Margin.

Spending to Drive Higher Growth Can Mean Lower Contribution Margin

Unless the GM on a new customer’s first purchase exceeds their CAC, there is an inverse relationship between expanding growth and achieving high contribution margin. Think of it this way: suppose that going into a month, the likely organic traffic and repeat buyers are more or less set. Boosting that month’s growth means adding more new paid customers, which makes paid customers a higher proportion of the month’s purchasers and therefore increases blended CAC. As an example, consider the following assumptions for Company B:

- The GM is $60 on an average order of $100

- Paid CAC is $150

- The company will have 1,000 new customers through organic means and 2,000 repeat buyers, or $300,000 in revenue with 60% GM ($180,000) from these customers, before spending on paid customers

- G&A (excluding marketing) for the month will be $150,000

- Last year Company B had $400,000 in revenue in the same month

- The company is considering the ramifications of targeting 25%, 50% or 100% year-over-year growth

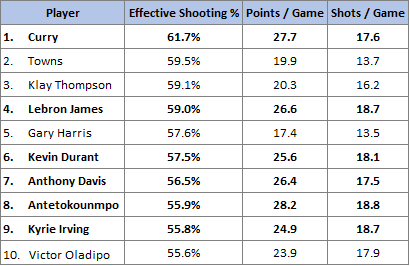

Table 1: The Relationship Between Contribution Margin & Growth

Since the paid CAC is $150 while gross margin is only $60 per new customer, each acquired customer generates negative $90 in contribution margin in the period. As can be seen in Table 1, the company would shrink 25% with no acquisition spend but would have $180,000 in contribution margin and a positive operating profit. At the other end of the spectrum, driving 100% growth requires spending $750,000 to acquire 5,000 new customers and results in negative $270,000 in contribution margin and an operating loss of $420,000 in the period. Of course, if new customers are expected to make multiple future purchases, then the number of repeat customers would rise in future periods.
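The Company B arithmetic can be reproduced with a short script. This sketch assumes, as the post's numbers imply, that each customer places one $100 order in the month, and it models "no acquisition spend" as the -25% scenario; it also reports blended CAC (spend divided by all purchasers) to show it rising with growth. It reproduces the endpoints quoted in the text; it is not a copy of Table 1 itself.

```python
from dataclasses import dataclass

# Company B assumptions from the post, in integer dollars
AOV = 100                  # average order value (one order per customer assumed)
GM_PER_ORDER = 60          # 60% gross margin on a $100 order
PAID_CAC = 150
BASE_CUSTOMERS = 3_000     # 1,000 organic new customers + 2,000 repeat buyers
G_AND_A = 150_000          # G&A excluding marketing
LAST_YEAR_REVENUE = 400_000


@dataclass
class Scenario:
    growth_pct: int
    paid_customers: int
    revenue: int
    acquisition_spend: int
    contribution_margin: int
    operating_profit: int
    blended_cac: float


def scenario(growth_pct: int) -> Scenario:
    target_revenue = LAST_YEAR_REVENUE * (100 + growth_pct) // 100
    base_revenue = BASE_CUSTOMERS * AOV
    # Paid customers needed to close the gap between the organic/repeat base and the target
    paid = max(0, (target_revenue - base_revenue) // AOV)
    customers = BASE_CUSTOMERS + paid
    spend = paid * PAID_CAC
    cm = customers * GM_PER_ORDER - spend
    return Scenario(growth_pct, paid, customers * AOV, spend, cm,
                    cm - G_AND_A, spend / customers)


for growth in (-25, 25, 50, 100):
    print(scenario(growth))
```

Because each paid customer costs $90 more than the GM it brings in, every step up in targeted growth lowers contribution margin by a fixed amount per added customer.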

Subscription Models Create More Consistency but are not a Panacea

When a company’s customers are monthly subscribers, each month starts with the prior month’s base less churn. To put it another way, if churn from the prior month is modest (for example 5%), then the month already has 95% of the prior month’s revenue from repeat customers. Additionally, if the company increases the average invoice value from these customers, it might even start the month with return customers accounting for as much revenue as the prior month. For B-to-B companies, high revenue retention is the norm, and an average customer will often pay them for 10 years or more.
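The carryover effect described above is simple to express. A minimal sketch, where the prior-month revenue of $100,000 is a hypothetical figure and the helper names are illustrative:

```python
def carried_over_revenue(prior_revenue: float, churn_rate: float,
                         invoice_uplift: float = 0.0) -> float:
    """Revenue a subscription business starts the month with from returning subscribers."""
    return prior_revenue * (1 - churn_rate) * (1 + invoice_uplift)


def uplift_to_offset_churn(churn_rate: float) -> float:
    """Increase in average invoice value needed for retained subscribers to match last month."""
    return churn_rate / (1 - churn_rate)


prior = 100_000.0  # hypothetical prior-month subscription revenue
print(carried_over_revenue(prior, 0.05))
needed = uplift_to_offset_churn(0.05)
print(f"{needed:.2%}", carried_over_revenue(prior, 0.05, needed))
```

With 5% churn, an increase of a little over 5% in average invoice value is enough for returning subscribers to fully replace the prior month's revenue.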

Consumer ecommerce subscriptions typically have much more substantial churn, with an average life of two years being closer to the norm. Additionally, the highest level of churn (which can be 30% or more) occurs in the second month, and the next highest in the third month, before tapering off. This means that companies trying to drive high sequential growth will have a higher overall churn rate than those that target more modest growth, because a larger share of their subscriber base is in those high-churn early months. Part of a company’s acquisition spend is needed just to stay even. For example, if we assume all new customers come from paid acquisition, the CAC is $200, and 15% of 10,000 customers churn, then the first $300,000 in marketing spend (1,500 replacement customers at $200 each) would just replace the churned customers, and additional spend would be needed to drive sequential growth.
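Both points above can be sketched in Python. The replacement-spend function uses the post's own numbers. The cohort simulation uses a hypothetical churn curve (30% entering month 2, 15% entering month 3, 5% thereafter) chosen only to match the shape the post describes; it shows that, under that assumption, a faster-growing subscriber base reports higher blended monthly churn.

```python
from dataclasses import dataclass

# Hypothetical churn curve: share of a cohort lost as it enters a given month of life
HYPOTHETICAL_CHURN = {2: 0.30, 3: 0.15}
LATER_MONTH_CHURN = 0.05  # hypothetical steady churn from month 4 onward


def replacement_spend(customers: int, churn_rate: float, cac: float) -> float:
    """Marketing spend needed just to replace the customers lost to churn."""
    churned = round(customers * churn_rate)
    return churned * cac


@dataclass
class Cohort:
    month_of_life: int
    active: float


def churn_rate_for(month_of_life: int) -> float:
    return HYPOTHETICAL_CHURN.get(month_of_life, LATER_MONTH_CHURN)


def blended_churn(monthly_growth: float, months: int = 24,
                  first_cohort: float = 1_000.0) -> float:
    """Simulate monthly cohorts; return the final month's churn as a share of the prior base."""
    cohorts: list[Cohort] = []
    new_customers = first_cohort
    rate = 0.0
    for _ in range(months):
        prior_base = sum(c.active for c in cohorts)
        lost = 0.0
        for c in cohorts:
            c.month_of_life += 1  # each existing cohort ages by one month
            churned = c.active * churn_rate_for(c.month_of_life)
            c.active -= churned
            lost += churned
        if prior_base > 0:
            rate = lost / prior_base
        cohorts.append(Cohort(1, new_customers))  # this month's new subscribers
        new_customers *= 1 + monthly_growth
    return rate


print(replacement_spend(10_000, 0.15, 200))
print(f"flat acquisition: {blended_churn(0.0):.1%}, 20%/month growth: {blended_churn(0.20):.1%}")
```

The simulation applies churn by age, so the only thing that changes between the two runs is the age mix of the base, which is the mechanism the paragraph describes.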

Investing in Companies with High Contribution Margin

As a VC, I tend to appreciate strong business models and like to invest after some baseline proof points are in place. In my last post I outlined a number of metrics that are important for tracking a company’s health, with the ratio of LTV (lifetime value) to CAC being one of the most important. When a company has a high contribution margin, it has time to build that ratio by adding more products or establishing subscriptions without burning through a lot of capital. Further, companies that have a high LTV/CAC ratio should have a high contribution margin as they mature, since a high ratio usually means customers buy many times, expanding the share of each month’s total revenue that comes from repeat business.

This thought process also applies to public companies. One of the most extreme examples is Facebook, which I’ve owned and recommended for five years. Even after the recent pullback, its stock price is about 7x what it was five years ago (a compound rate of nearly 50% per year since I began recommending it). That is not a surprise, as Facebook’s contribution margin runs over 70% and revenue was up 42% year/year in Q2. These are extraordinary numbers for a company its size.
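The compound rate follows directly from the 7x multiple over five years. A quick check:

```python
def cagr(multiple: float, years: float) -> float:
    """Compound annual growth rate implied by an overall price multiple over a period."""
    return multiple ** (1 / years) - 1


print(f"{cagr(7, 5):.1%}")
```

A 7x gain over five years works out to roughly 47.6% per year, consistent with "nearly 50%".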

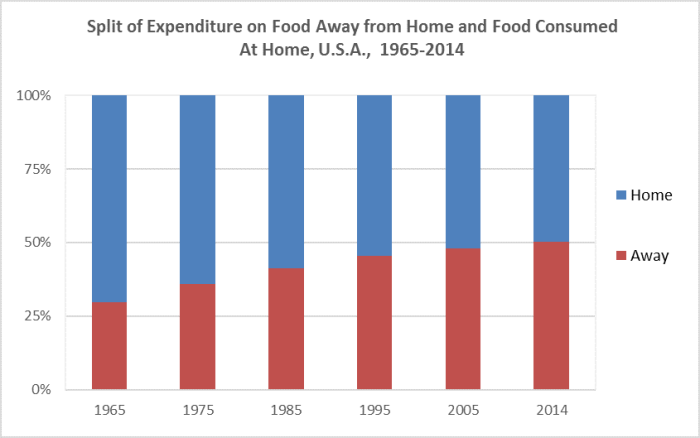

To give the reader some idea of how this method can be used as one screen for public companies, Table 2 shows gross margin, contribution margin, revenue growth and this year’s stock market performance for seven public companies.

Table 2: Public Company Contribution Margin Analysis

Two of the seven companies shown stand out as having both high Contribution Margin and strong revenue growth: Etsy and Stitch Fix. Each had year/year revenue growth of around 30% in Q2 coupled with 44% and 29% contribution margins, respectively. This likely has been a factor in Stitch Fix stock appreciating 53% and Etsy 135% since the beginning of the year.

Three of the seven have weak models and are struggling to balance revenue growth and contribution margin: Blue Apron, Overstock, and Groupon. Both Blue Apron and Groupon have been attempting to reduce their losses by cutting their marketing spend. While this increased their CM by 10% and 20%, respectively, it also left both with negative growth while still losing money. Blue Apron’s losses were over 16% of revenue; this, coupled with shrinking revenue, feels like a lethal combination. Blue Apron stock is down only marginally year-to-date but is 59% lower than a year ago. Groupon, because of much higher gross margins than Blue Apron (52% vs. 35%), still seems to have a chance to turn things around, but it has a lot of work to do. Overstock went in the other direction, increasing marketing spend to drive modest revenue growth of 12%. But this led to a negative CM and substantially larger losses. That strategy does not seem to have benefited shareholders, as the stock has declined 53% since the beginning of the year.

eBay is a healthy company from a contribution margin point of view but has revenue growth below 10%. I can’t tell whether substantially increasing its marketing spend (at the cost of lower CM) would strike a better balance.

For me, Spotify is the one anomaly in the table: its stock has appreciated 46% since the IPO despite weak contribution margins, which were one reason for the negative view I expressed in a prior post. I think three factors drive this: its product is an iconic brand; there is not a lot of float in the stock, creating some scarcity; and contribution margin has been improving, giving bulls on the stock a belief that it can eventually reach profitability. I call it an anomaly because, compared with Facebook, the relative valuations are hard to justify. Facebook grew 42% in Q2 and Spotify 26%; Facebook is trading at a P/E of 24, whereas even if we assume Spotify can eventually generate a 6% net profit (it is currently at a 7% loss before finance charges and a 31% loss after finance charges, so this feels optimistic), Spotify would be trading at 112 times this theoretical future earnings.
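The 112x figure comes from setting the valuation against earnings at an assumed net margin, so P/E equals the price-to-sales multiple divided by that margin. The post does not state which revenue figure underlies the calculation, so the revenue multiple back-solved below is an inference from the two stated numbers (112x and 6%), not a reported figure; the helper names are illustrative.

```python
def pe_at_assumed_margin(price_to_sales: float, net_margin: float) -> float:
    """P/E implied if today's valuation were set against revenue earning an assumed net margin."""
    return price_to_sales / net_margin


def implied_price_to_sales(pe: float, net_margin: float) -> float:
    """Inverse relationship: the revenue multiple consistent with a given P/E and margin."""
    return pe * net_margin


print(round(implied_price_to_sales(112, 0.06), 2))
```

Put another way, a valuation of roughly 6.7x revenue only looks like a 24x P/E (Facebook's multiple) if net margin reaches about 28%, far above the optimistic 6% assumed here.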

SoundBytes

I found the recent controversy over Elon Musk sharing his thoughts on taking Tesla private interesting. On the one hand, people want transparency from companies, and Elon certainly provides that! On the other hand, it clearly affected the stock price for a few days, and the SEC abhors anything that can be construed as stock manipulation. Of course, Elon may not have been as careful as he should have been when he tweeted about whether financing was lined up… but like most entrepreneurs, he was optimistic.